If you are in the process of evaluating and selecting the components for a brand new database server to run an OLTP or DW workload on SQL Server 2014 Enterprise Edition, you have several initial choices that you have to make as a part of the decision process.

If you are in the process of evaluating and selecting the components for a brand new database server to run an OLTP or DW workload on SQL Server 2014 Enterprise Edition, you have several initial choices that you have to make as a part of the decision process.

These recommendations are valid for 1C:Enterprise because this system creates an OLTP workload.

Continue reading Recommended Intel Processors for SQL Server 2014 Workloads

Tag Archives: MSSQL

Scheduled operations at the DBMS level for MS SQL Server

Instruction to execute the scheduled operations at the DBMS level.

Information can be applied for the client-server variant of 1С:Enterprise 8 when using DBMS MS SQL Server.

Continue reading Scheduled operations at the DBMS level for MS SQL Server

Compressing 1С:Enterprise database in MS SQL Server

Subject of compressing the 1С databases is currently a quite often discussed. The compression advantages are known – reducing the size of database, reducing the load on the disk subsystem and some acceleration of hard read/write operations. Among the shortcomings – a slight increase of the load on the DBMS server processors due to the consumption of the resources for data compression/decompression. But when using as DBMS MSSQL and DB2 (will not say about Oracle and PostgreSQL, because I do not know) there is one «pitfall» — during restructuring the new tables and indexes are decompressed. It can happen both during configuration update with modification of metadata structure and when testing and fixing IB (re-indexing re-creates only the indexes, and restructuring – both tables and indexes). «The problem» lies in the fact that the compression sign is set individually for each table and index.

Problem solution for MS SQL Server (only for Enterprise-versions 2008/2008R2).

It is possible to catch the moment of new table or index creating using DDL-trigger that is called immediately after execution of CREATE TABLE/INDEX, but before transferring the data by the 1С platform. The trigger of this type can be created both for the specific IB and for the entire server. Trigger creation for IB is not included in the 1С license agreement, so it is the best to create for the entire server, all the more it will allow servicing all the bases at once on this server.

Creating DDL-trigger for the operation of table creation:

CREATE TRIGGER data_compression ON ALL SERVER AFTER CREATE_TABLE AS --Trigger text

Creating DDL-trigger for the operation of index creation:

CREATE TRIGGER index_compression ON ALL SERVER AFTER CREATE_INDEX AS -- Trigger text

To set the compression sign of the pages for the table it is required to execute the code:

ALTER TABLE base name.scheme name.table name REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = PAGE)

To set the compression sign of the pages for the index it is required to execute the code:

ALTER INDEX index name ON base name.scheme name.table name REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = PAGE)

In general, it is already enough to create a workable mechanism which will not allow the 1С platform to create a new table or index without compression, but I wanted more flexibility in the settings :).

Below there is a solution in the form in which we use it. This allowed automatically applying compression for all the databases on the server.

Service base CompressionSetting is created with two tables:

1) Databases – to store the list of bases that are NOT required to compress.

CREATE TABLE dbo.Databases(

name nvarchar(100) NULL,

active int NULL

) ON PRIMARY

From ~200 bases on the server I introduced in this table only one – tempdb:

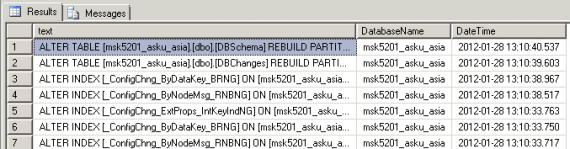

2) Trace – for monitoring of DDL-triggers

CREATE TABLE dbo.trace(

text nvarchar(max) NULL,

DatabaseName nvarchar(max) NULL,

DateTime datetime NULL

) ON PRIMARY

Next, I created 2 DDL-triggers:

1) For tables

CREATE TRIGGER data_compression

ON ALL SERVER

AFTER CREATE_TABLE

AS

DECLARE @SchemaName nvarchar(150),

@ObjectName nvarchar(150),

@DatabaseName nvarchar(150),

@cmd nvarchar(150)

--Get scheme name from the executable command CREATE TABLE

SET @SchemaName = EVENTDATA().value('(/EVENT_INSTANCE/SchemaName)1','nvarchar(150)')

--Get table name

SET @ObjectName = EVENTDATA().value('(/EVENT_INSTANCE/ObjectName)1','nvarchar(150)')

--Get base name

SET @DatabaseName = EVENTDATA().value('(/EVENT_INSTANCE/DatabaseName)1','nvarchar(150)')

--Generate from the received data the required command to set a compression sign for the table

set @cmd = 'ALTER TABLE ' + @DatabaseName + '.' + @SchemaName + '.' + @ObjectName + ' REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = PAGE)'

--Now check the settings – if there is no base in the table CompressionSetting.dbo.Databases with a sign Active = 1, then execute command, otherwise, ignore

IF NOT EXISTS (SELECT 1 AS Expr1

FROM CompressionSetting.dbo.Databases AS T

WHERE (name = @DatabaseName) AND Active = 1)

BEGIN

INSERT INTO CompressionSetting.dbo.trace (text, DatabaseName, DateTime) SELECT @cmd, @DatabaseName, GETDATE()

EXEC (@cmd)

END

ELSE

BEGIN

PRINT 'TEST'

END

2) For indices

CREATE TRIGGER index_compression

ON ALL SERVER

AFTER CREATE_INDEX

AS

DECLARE @SchemaName nvarchar(150),

@ObjectName nvarchar(150),

@TargetObjectName nvarchar(150),

@DatabaseName nvarchar(150),

@cmd nvarchar(150)

--Get scheme name of the executable command CREATE INDEX

SET @SchemaName = EVENTDATA().value('(/EVENT_INSTANCE/SchemaName)1','nvarchar(150)')

--Get index name

SET @ObjectName = EVENTDATA().value('(/EVENT_INSTANCE/ObjectName)1','nvarchar(150)')

--Get table name

SET @TargetObjectName = EVENTDATA().value('(/EVENT_INSTANCE/TargetObjectName)1','nvarchar(150)')

--Get base name

SET @DatabaseName = EVENTDATA().value('(/EVENT_INSTANCE/DatabaseName)1','nvarchar(150)')

-- Generate from the received data the required command to set a compression sign for the index

set @cmd = 'ALTER INDEX ' + @ObjectName + ' ON ' + @DatabaseName + '.' + @SchemaName + '.' + @TargetObjectName + ' REBUILD PARTITION = ALL WITH (DATA_COMPRESSION = PAGE)'

--Now check the settings – if there is no base in the table CompressionSetting.dbo.Databases with a sign Active = 1, then execute command, otherwise, ignore

IF NOT EXISTS (SELECT 1 AS Expr1

FROM CompressionSetting.dbo.Databases AS T

WHERE (name = @DatabaseName) AND Active = 1)

BEGIN

INSERT INTO CompressionSetting.dbo.trace (text, DatabaseName, DateTime) SELECT @cmd, @DatabaseName, GETDATE()

EXEC (@cmd)

END

ELSE

BEGIN

PRINT 'TEST'

END

Immediately after creation of triggers no changes will happen, of course, in the size of the bases. To compress the existing bases, it is possible to use the following methods:

- Write script for alternate search of the tables and indexes and setting for them the compression sign. I did not like this option, because to compress the large tables it is required a lot of time, a base in this case is inflated, and then a very long time is decreased through SHRINK DATABASE.

- Execute a full restructuring through «Verify and repair». It is more quickly by the time, but the base is also inflated and then it would have to be decreased through SHRINK DATABASE.

- The most optimal option, in my opinion, – re-create base through the dt-file. In this case, the ready base will be initially of minimum size and the base loading time with compression is a little different from the loading in a normal mode.

On the admissibility of the application of such compression method I asked the question in the partner conference to which the following comment of Sergei Nuraliev was received:

«…It is possible to assume the position that we believe that the use of this capabilities must be denied or allowed, but with an adequate support (methodological and software). An option to use them without a proper support we consider incorrect, as it leads to the administration problems.»

Thereby, I do not think that the proposed approach must be used massively.

The administrator who decided to use it should clearly understand what is he doing and what consequences might be.